Most computer vendors flood the marketplace with more or less sophisticated blade solutions. Remark: blade solutions are to be clearly distinguished from blade servers.

So here are some musings on blades and why I tend to differentiate between basic blade servers and the more sophisticated approach.

Basic blade concepts are primarily sold on the same handful of aspects:

- Optimized footprint aka rackspace

- Reduced cabling

- Energy efficiency

- Virtualized installation media

- Cooling efficiency

- Central management and maintenance

- Easy hardware deployment and service

To whatever extent the different breeds address these issues, they are the superficial quality criteria argued in many decision processes, and they miss the core of the discussion. These topics are covered in the mainstream publications and are taken as the measure of quality of the “solution”.

From my point of view this completely misses the point.

The real value of blades is interconnect virtualisation together with the so-called “stateless server” approach. It is no surprise that many vendors try to keep the discussion on the less important facts, since according to my labs and evaluations only three major vendors have even understood the issue. Depending on their legacy obligations, they take more or less radical approaches to reach the goal.

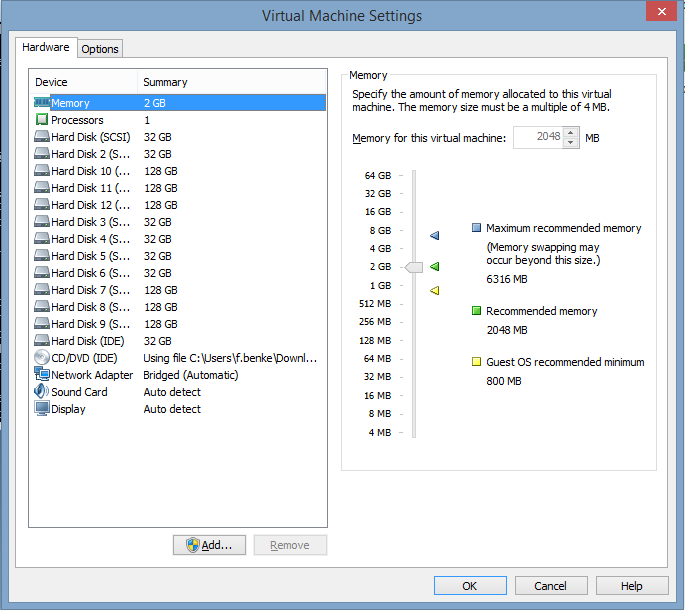

The goal is to generate a server's personality, that is, its identity in technical terms, dynamically by applying a so-called service profile or server profile. This profile contains the different aspects of the individual server identity, such as:

- BIOS version

- Other firmware version, e.g. HBA or NIC

- WWNs for port and node

- MAC addresses

- Server UUID

- Interface assignments

- Priorities for QoS and power settings

According to these profiles, and drawing on pools of IDs, MACs and WWNs, the server's identity is generated dynamically and assigned during a so-called provisioning phase. The blade server is then available for installation.

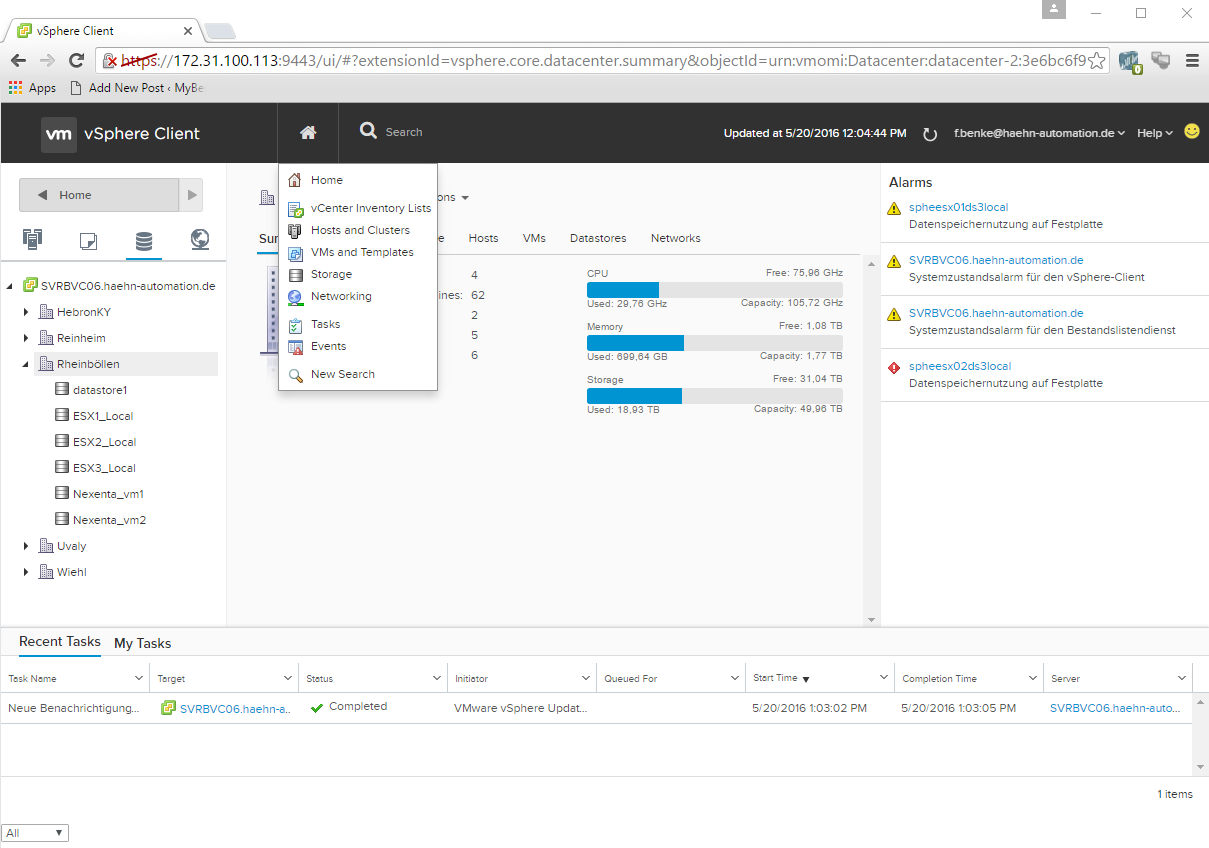
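To make the provisioning idea a bit more tangible, here is a minimal Python sketch of a profile drawing its identity from pools. It is not any vendor's API: `IdPool`, `ServiceProfile`, `Blade`, `build_profile` and `associate` are names I made up for illustration, and the MAC and WWN prefixes are arbitrary example values.

```python
from dataclasses import dataclass
from itertools import count
from typing import List, Optional
import uuid


class IdPool:
    """Hands out identifiers built from a fixed prefix plus a running counter."""

    def __init__(self, prefix: str, start: int = 0):
        self._prefix = prefix          # hypothetical example prefix
        self._counter = count(start)

    def allocate(self) -> str:
        n = next(self._counter)
        # Render the counter as three colon-separated hex octets.
        suffix = f"{(n >> 16) & 0xFF:02x}:{(n >> 8) & 0xFF:02x}:{n & 0xFF:02x}"
        return f"{self._prefix}:{suffix}"


@dataclass
class ServiceProfile:
    name: str
    server_uuid: str
    macs: List[str]
    wwnn: str            # node WWN
    wwpns: List[str]     # one port WWN per virtual HBA


@dataclass
class Blade:
    slot: int
    profile: Optional[ServiceProfile] = None


def build_profile(name: str, mac_pool: IdPool, wwn_pool: IdPool,
                  nics: int = 2, hbas: int = 2) -> ServiceProfile:
    """Generate a server identity from the pools (the provisioning phase)."""
    macs = [mac_pool.allocate() for _ in range(nics)]
    wwnn = wwn_pool.allocate()
    wwpns = [wwn_pool.allocate() for _ in range(hbas)]
    return ServiceProfile(name, str(uuid.uuid4()), macs, wwnn, wwpns)


def associate(blade: Blade, profile: ServiceProfile) -> None:
    """Apply the profile to a blade that has no personality yet."""
    if blade.profile is not None:
        raise ValueError(f"blade in slot {blade.slot} is already personalized")
    blade.profile = profile
```

A typical use would be to create one MAC pool and one WWN pool, call `build_profile("esx-01", mac_pool, wwn_pool)` and then `associate(Blade(slot=1), profile)`. The point is that the blade itself carries no identity until the profile is applied.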

This approach makes it possible to generate a server personality “on the fly” that serves dedicated needs, for instance a VMware vSphere server or a database server. Furthermore, if more servers of the same type are needed, the profiles may be cloned or derived from a template, so that new rollouts are quick and easy. In case of failure or disaster recovery, the profiles may even roam to other, not yet personalized servers. Assuming a boot from SAN or boot from iSCSI scenario, failed servers are back in minutes, and the move is transparent even to hardware-based licensing.

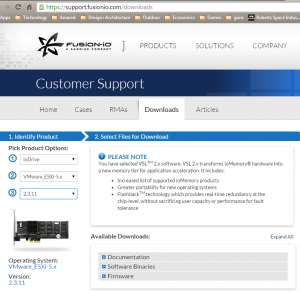
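The cloning and roaming described above can be sketched in a few lines of Python. Again, this is only an illustration under my own assumptions: `Profile`, `Blade`, `clone_from_template` and `fail_over` are hypothetical names, and the boot target is just a placeholder string standing in for a SAN or iSCSI boot LUN.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class Profile:
    name: str
    role: str          # e.g. "vsphere" or "database"
    boot_target: str   # placeholder for a SAN or iSCSI boot LUN
    mac: str
    wwpn: str


@dataclass
class Blade:
    slot: int
    healthy: bool = True
    profile: Optional[Profile] = None


def clone_from_template(template: Profile, name: str, mac: str,
                        wwpn: str, boot_target: str) -> Profile:
    """Derive a new profile: the role is inherited, the identity is unique."""
    return replace(template, name=name, mac=mac, wwpn=wwpn,
                   boot_target=boot_target)


def fail_over(failed: Blade, chassis: List[Blade]) -> Blade:
    """Move the profile of a failed blade to the first unpersonalized spare."""
    spare = next((b for b in chassis if b.healthy and b.profile is None), None)
    if spare is None:
        raise RuntimeError("no unpersonalized spare blade available")
    # The identity (MAC, WWPN, boot target) travels with the profile, so the
    # spare boots the same image and looks like the old server to the SAN.
    spare.profile, failed.profile = failed.profile, None
    failed.healthy = False
    return spare
```

Because MAC, WWPN and boot target live in the profile and not in the hardware, the replacement blade needs no zoning or LUN masking changes in this model. That is the whole trick behind the “back in minutes” claim.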

Particularly because of the need for flexible interconnect assignment, the classical approach of dedicated Ethernet or Fibre Channel switch modules in a blade infrastructure is no longer of use. The classical approach needs dedicated interconnects at dedicated blade positions, which is exactly the limitation a service profile wants to overcome.

With converged infrastructure, that is, support for FCoE and data center bridging together with the corresponding so-called converged network adapters, this limitation has been overcome on the interconnect side. Here the interconnect is configured to cover the server-side settings and dynamically assigns different NIC and HBA configurations to the individual blade. What is more, the connections may apply QoS or bandwidth reservation settings and implement high connection availability in a simplified manner.
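As a rough illustration of the bandwidth reservation idea, the following Python sketch carves one converged uplink into virtual NICs and HBAs with guaranteed minimum shares. It is loosely modeled on the percentage-based allocation used in data center bridging, but it is my own simplification: `VirtualInterface`, `guaranteed_gbps` and the 10 Gbit/s default are assumptions for the example, not a real adapter or switch API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class VirtualInterface:
    name: str
    kind: str            # "nic" or "hba"
    min_share_pct: int   # guaranteed share of the uplink under congestion


def guaranteed_gbps(interfaces: List[VirtualInterface],
                    link_gbps: float = 10.0) -> Dict[str, float]:
    """Translate percentage reservations into guaranteed Gbit/s per interface."""
    total = sum(i.min_share_pct for i in interfaces)
    if total > 100:
        raise ValueError(f"reservations add up to {total}%, more than the link")
    return {i.name: link_gbps * i.min_share_pct / 100 for i in interfaces}


# Example layout for one blade: management, VM traffic and one FCoE HBA.
layout = [
    VirtualInterface("mgmt", "nic", 10),
    VirtualInterface("vm", "nic", 50),
    VirtualInterface("fc0", "hba", 40),
]
print(guaranteed_gbps(layout))
```

The reservations are minimum guarantees in this model, so an interface could still use idle capacity beyond its share. Changing the layout is a matter of editing the list, not of recabling or swapping switch modules.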

Based on that, far more advanced and very modern hardware operation concepts are possible. Only blade concepts that support the full range of this essential decoupling of hardware and service role deserve the name “solution”. Anything else is “me too”.

Some how-to posts from my previous evaluation and installation projects will follow. Some readers may remember my old blog 😉